Brij kishore Pandey on the Rise of Agentic AI

A deeper take on Brij kishore Pandey's viral AI stack post, explaining the shift from generative AI to agentic systems.

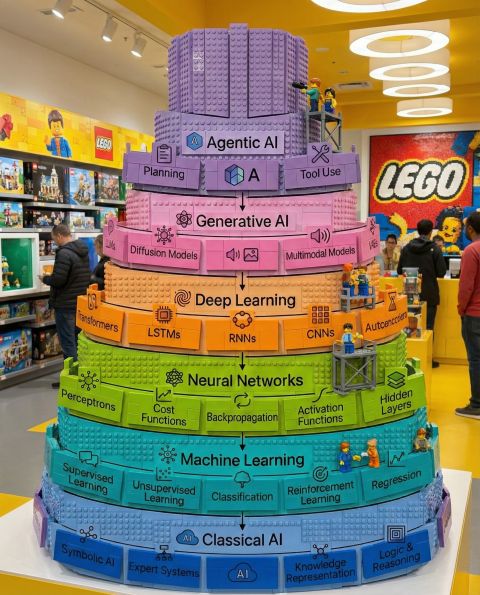

Brij kishore Pandey recently shared something that caught my attention: AI is evolving like "a set of building blocks," and while we spent years on the foundation, "the shift at the very top" is what should matter most right now.

He framed it in a way that instantly clicked for me: "We are currently graduating from the Pink Layer (Generative AI) to the Purple Layer (Agentic AI)." Then he highlighted the keywords that define the new layer: "Planning," "Tool Use," and "Autonomous Execution." That is the real change in the conversation.

In this post, I want to expand on what Brij is pointing to, because it is not just another AI buzzword cycle. It is a shift in what we expect software to do.

The AI stack as building blocks (and why the top matters)

Brij's building-blocks metaphor is useful because it prevents two common mistakes:

- Treating every new AI capability as a standalone revolution

- Assuming the next layer replaces everything underneath

In reality, each layer builds on the previous one. We still need statistics, optimization, and evaluation. We still need machine learning pipelines, data quality, and monitoring. But the new frontier changes the interface between humans, models, and software.

"Notice the difference in keywords in that top purple layer: 'Planning,' 'Tool Use,' 'Autonomous Execution.'"

That difference in keywords implies a difference in product design. Generative AI is great at producing content. Agentic AI is about producing outcomes.

Layer 1: The Foundation (Classical AI and Machine Learning)

Brij describes the foundation as the era where we taught computers "rules, logic, and patterns" with an emphasis on "classification and prediction." Even now, a large share of the business value from AI is still rooted here.

Think about:

- Fraud detection models predicting probability of chargebacks

- Demand forecasting for supply chain planning

- Customer churn classification to trigger retention campaigns

- Computer vision models classifying defects in manufacturing

These systems are powerful, but their behavior is constrained. You give them data, they return scores, labels, or predictions. A human or downstream system decides what to do next.
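To make that boundary concrete, here is a minimal sketch. The `chargeback_score` function is a hand-written stand-in for a trained fraud model, and the feature names and threshold are my own assumptions for illustration. The point is only that the model returns a number and a separate rule decides what happens next.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    is_new_device: bool


def chargeback_score(tx: Transaction) -> float:
    """Stand-in for a trained model: returns a risk score in [0, 1]."""
    score = 0.1
    if tx.amount > 1000:
        score += 0.5
    if tx.is_new_device:
        score += 0.3
    return min(score, 1.0)


def downstream_decision(score: float, review_threshold: float = 0.5) -> str:
    """The decision lives outside the model: a rule (or a human) acts on the score."""
    return "manual_review" if score >= review_threshold else "approve"


score = chargeback_score(Transaction(amount=1500.0, is_new_device=True))
decision = downstream_decision(score)
print(decision)
```

Everything after the score is ordinary software or human judgment, which is exactly the gap the upper layers start to close.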

The important context: this layer is not going away. In fact, agentic systems often depend on classical ML components for ranking, anomaly detection, routing, or confidence scoring. Agentic AI sits on top, coordinating actions that may rely on many non-agent models.

Layer 2: The Explosion (Generative AI)

Brij calls the last couple of years "The Explosion" because LLMs, diffusion models, and VAEs gave us the power to create: "text, code, images, and video." That is exactly how it felt in practice.

Generative AI changed the default UI for software. Instead of menus, forms, and rigid workflows, you can type or speak intent. Instead of "learn the tool," the tool starts to learn your request.

But generative AI, by itself, has a ceiling:

- It generates outputs, but does not reliably finish end-to-end tasks

- It can draft, summarize, and suggest, but it often stops at "here is what you could do"

- It is reactive unless you wrap it in a system that provides context, tools, and feedback loops

A simple example: an LLM can write a great incident postmortem. But it cannot, on its own, pull logs, correlate metrics, file tickets, and verify that a fix actually reduced error rates. That is where the next layer starts.

Layer 3: The Frontier (Agentic AI)

Brij calls the top layer "The Frontier (Agentic AI)," and I like that phrasing because frontiers are exciting and risky at the same time.

At a practical level, an "agent" is not just a model. It is a system that can:

- Maintain state (memory)

- Decide on steps (planning)

- Take actions (tool use)

- Observe results and adapt (feedback)

- Work toward a goal with limited supervision (autonomous execution)
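Taken together, those five capabilities form a loop. The sketch below is one possible shape for it, not a reference design: the `Agent` class, the tool names, and the trivial planner (run every tool that has not run yet) are all hypothetical. In a real system the `plan` method would typically call a model.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    goal: str
    tools: dict[str, Callable[[], str]]
    memory: list[str] = field(default_factory=list)  # state carried between steps

    def plan(self) -> list[str]:
        # Planning: re-derived from memory on every iteration, so the agent
        # adapts to what has already happened.
        done = {entry.split(":")[0] for entry in self.memory}
        return [name for name in self.tools if name not in done]

    def run(self, max_steps: int = 10) -> list[str]:
        # Autonomous execution, bounded by max_steps as a simple safety limit.
        for _ in range(max_steps):
            steps = self.plan()
            if not steps:
                break
            name = steps[0]
            result = self.tools[name]()               # tool use
            self.memory.append(f"{name}:{result}")   # observe and record
        return self.memory


agent = Agent(
    goal="summarize incident",
    tools={"pull_logs": lambda: "3 errors", "file_ticket": lambda: "TICKET-1"},
)
history = agent.run()
print(history)
```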

Or, in Brij's words:

"We are no longer just asking models to generate a poem or an email. We are building systems that have agency."

Memory: more than chat history

When Brij says agents can "retain memory of past interactions," the key point is that memory needs structure.

There is short-term memory (what is in the current context window), and long-term memory (what the system retrieves from a database, vector store, CRM, ticketing system, or document repository). In real products, memory also includes preferences, permissions, and a record of actions taken.

Without disciplined memory design, agents either forget critical context or invent it. With good memory design, they become consistent collaborators.
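Here is a minimal sketch of what "structured" could mean, assuming three separate stores: a bounded short-term buffer, a keyed long-term store, and an action log. The `AgentMemory` class and its method names are hypothetical, and a plain dict stands in for whatever database or vector store a real product would use.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentMemory:
    # Short-term: only the most recent turns fit, like a context window.
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Long-term: facts and preferences retrieved by key.
    long_term: dict[str, str] = field(default_factory=dict)
    # Record of actions taken, kept separately for auditing.
    action_log: list[str] = field(default_factory=list)

    def remember_turn(self, message: str) -> None:
        self.short_term.append(message)

    def store_fact(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def recall(self, key: str) -> Optional[str]:
        # Returning None for unknown keys lets the agent ask instead of inventing.
        return self.long_term.get(key)


memory = AgentMemory()
for i in range(1, 5):
    memory.remember_turn(f"turn {i}")
memory.store_fact("preferred_channel", "email")
memory.action_log.append("sent weekly summary")
print(list(memory.short_term), memory.recall("budget"))
```

The oldest turn falls out of the short-term buffer automatically, while the stored preference survives. An explicit `None` for a missing fact is the hook for a clarifying question.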

Planning: turning intent into steps

Planning is the bridge between "What do you want?" and "What should I do next?"

A planning-capable agent can take a goal like "prepare a weekly pipeline review" and break it down:

- Pull CRM data

- Validate missing fields

- Segment by stage, owner, and close date

- Draft insights and risks

- Create slides

- Send to stakeholders for review

Planning does not mean the agent always gets it right. It means the system can propose a workflow, ask clarifying questions, and revise the plan based on constraints.
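As a rough illustration, the pipeline review plan can be written as data and revised against a constraint. The capability names (`crm_read`, `email_send`, and so on) and the `revise_plan` helper are assumptions of mine. A real planner would also track dependencies between steps, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    requires: str  # hypothetical capability needed to run the step


PIPELINE_REVIEW = [
    Step("Pull CRM data", "crm_read"),
    Step("Validate missing fields", "crm_read"),
    Step("Segment by stage, owner, and close date", "compute"),
    Step("Draft insights and risks", "llm"),
    Step("Create slides", "slides_write"),
    Step("Send to stakeholders for review", "email_send"),
]


def revise_plan(plan: list[Step], allowed: set[str]) -> tuple[list[Step], list[Step]]:
    """Split a plan into steps the agent may run and steps to hand to a human."""
    runnable = [s for s in plan if s.requires in allowed]
    blocked = [s for s in plan if s.requires not in allowed]
    return runnable, blocked


# Constraint: this agent is not allowed to send email on its own.
allowed = {"crm_read", "compute", "llm", "slides_write"}
runnable, blocked = revise_plan(PIPELINE_REVIEW, allowed)
print([s.name for s in blocked])
```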

Tool use: the difference between talk and action

Tool use is where agentic AI becomes operational. Tools can be:

- API calls (CRM, ERP, Slack, Jira, GitHub)

- Database queries

- Browsers and RPA actions

- Code execution in a sandbox

- Search and retrieval

This is also where reliability, security, and governance become non-negotiable. The moment an agent can create a ticket, change a setting, or trigger a deployment, you need strong guardrails: authentication, authorization, logging, rate limits, and human approval when stakes are high.
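A minimal sketch of some of those guardrails follows, assuming every tool call goes through one gateway. The `ToolGateway` class, the agent and tool names, and the limits are hypothetical. It covers authorization, logging, and a simple rate limit; authentication and human approval are left out.

```python
import logging
import time
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.tools")


class ToolGateway:
    def __init__(self, permissions: dict[str, set[str]], max_calls_per_minute: int = 5):
        self.permissions = permissions      # agent id -> tools it may call
        self.max_calls = max_calls_per_minute
        self.calls: list[float] = []        # timestamps of recent calls
        self.tools: dict[str, Callable[..., str]] = {}

    def register(self, name: str, fn: Callable[..., str]) -> None:
        self.tools[name] = fn

    def call(self, agent_id: str, tool: str, **kwargs) -> str:
        # Authorization: deny anything not explicitly granted (least privilege).
        if tool not in self.permissions.get(agent_id, set()):
            log.warning("denied %s -> %s", agent_id, tool)
            raise PermissionError(f"{agent_id} may not call {tool}")
        # Rate limit: count calls made in the last 60 seconds.
        now = time.monotonic()
        self.calls = [t for t in self.calls if now - t < 60]
        if len(self.calls) >= self.max_calls:
            raise RuntimeError("rate limit exceeded")
        self.calls.append(now)
        log.info("call %s -> %s %s", agent_id, tool, kwargs)  # audit trail
        return self.tools[tool](**kwargs)


gateway = ToolGateway({"support-agent": {"create_ticket"}}, max_calls_per_minute=2)
gateway.register("create_ticket", lambda title: f"created: {title}")
gateway.register("trigger_deploy", lambda: "deployed")

result = gateway.call("support-agent", "create_ticket", title="VPN down")
try:
    gateway.call("support-agent", "trigger_deploy")
    denied = False
except PermissionError:
    denied = True
print(result, denied)
```

Routing every call through a single choke point is a design choice: it keeps the policy in one place instead of scattering checks across individual tools.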

Autonomous execution: outcomes, not drafts

Autonomy is a spectrum. Many of the best "agentic" systems today are not fully autonomous. They are semi-autonomous: they execute steps, but pause for approvals at key points.

That is often the right design. You can get speed without giving up control.
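One way to picture semi-autonomy is an executor that runs routine steps on its own and pauses at anything marked high stakes. The `Action` type, the `high_stakes` flag, and the `approve` callback are hypothetical names for this sketch. In practice the callback might post to a chat channel or an approval queue.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    high_stakes: bool = False


def execute(actions: list[Action], approve: Callable[[Action], bool]) -> list[str]:
    """Run actions in order; ask for approval on high-stakes ones, stop if denied."""
    log = []
    for action in actions:
        if action.high_stakes and not approve(action):
            log.append(f"halted before: {action.name}")
            break
        log.append(f"done: {action.name}")
    return log


actions = [
    Action("run diagnostics"),
    Action("propose fix"),
    Action("restart service", high_stakes=True),
    Action("document outcome"),
]

denied_run = execute(actions, approve=lambda a: False)
approved_run = execute(actions, approve=lambda a: True)
print(denied_run)
print(approved_run)
```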

What changes for builders and teams

Brij's stack implies a shift in what we build and how we measure success.

1) From prompts to workflows

Prompting matters, but it is not the whole game anymore. Agentic systems are closer to product engineering: state management, tool integrations, error handling, retries, and observability.

2) From "accuracy" to "task completion"

Generative AI evaluation often focuses on response quality. Agentic evaluation adds:

- Did the system complete the task?

- How many steps did it take?

- How often did it ask for help?

- Did it follow policy?

- Did it cause any unintended actions?

A useful metric is cost per successful outcome, not cost per token.
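A small sketch of how those questions could roll up into numbers follows. The `Run` record, its fields, and the sample figures are invented for illustration. I am also assuming that a run only counts as a success if it completed with no policy violations.

```python
from dataclasses import dataclass


@dataclass
class Run:
    completed: bool
    steps: int
    asked_for_help: bool
    policy_violations: int
    cost_usd: float


def summarize(runs: list[Run]) -> dict[str, float]:
    successes = [r for r in runs if r.completed and r.policy_violations == 0]
    total_cost = sum(r.cost_usd for r in runs)  # failed runs still cost money
    return {
        "completion_rate": len(successes) / len(runs),
        "avg_steps": sum(r.steps for r in runs) / len(runs),
        "help_rate": sum(r.asked_for_help for r in runs) / len(runs),
        "cost_per_success": total_cost / len(successes) if successes else float("inf"),
    }


runs = [
    Run(completed=True, steps=6, asked_for_help=False, policy_violations=0, cost_usd=0.25),
    Run(completed=True, steps=9, asked_for_help=True, policy_violations=0, cost_usd=0.50),
    Run(completed=False, steps=12, asked_for_help=True, policy_violations=0, cost_usd=0.25),
    Run(completed=True, steps=5, asked_for_help=False, policy_violations=1, cost_usd=0.50),
]
report = summarize(runs)
print(report)
```

Dividing total spend, including failed and non-compliant runs, by the number of successes is what separates this from a per-token view: two of these four runs succeed, so each success effectively costs twice the average run.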

3) From "model choice" to "system design"

Model selection still matters, but architecture matters more: planners vs executors, tool routers, memory stores, and safeguards.

In other words, the most durable advantage will come from systems thinking, not just access to the newest model.

Real-world examples of agentic AI (in plain language)

To make the purple layer concrete, here are a few examples where memory, planning, and tool use show up together:

- IT support agent: reads a ticket, asks clarifying questions, checks device posture, runs approved diagnostics, proposes a fix, and documents the outcome

- Sales operations agent: monitors pipeline hygiene, nudges owners, updates fields from emails, and prepares weekly summaries

- Data analyst agent: receives a question, generates a query, runs it, validates anomalies, creates a chart, and writes a narrative summary

- Developer productivity agent: triages issues, reproduces bugs in a sandbox, proposes a patch, runs tests, and opens a PR for review

None of these should be fully autonomous by default. But each can be meaningfully "agentic" while still operating within constraints.

The risks to take seriously

If we are graduating from generative to agentic, the failure modes also change.

- Hallucinated actions: not just wrong text, but wrong operations

- Permission leakage: agents accidentally accessing or sharing data they should not

- Hidden brittleness: a workflow breaks when an API response format changes

- Goal misalignment: the agent optimizes for speed or completion, not correctness or safety

The antidote is engineering discipline: least privilege access, audits, step-level validation, sandboxing, and human-in-the-loop checkpoints.
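Step-level validation can be as simple as checking a tool's response before the agent acts on it. This sketch assumes a hypothetical ticket API whose responses should carry string `id` and `status` fields. The field names are mine, not from any real service.

```python
def validate_ticket_response(payload: dict) -> dict:
    """Check a (hypothetical) ticket API response before the agent uses it."""
    required = {"id": str, "status": str}
    for key, expected_type in required.items():
        if key not in payload:
            raise ValueError(f"missing field: {key}")
        if not isinstance(payload[key], expected_type):
            raise ValueError(f"wrong type for {key}: {type(payload[key]).__name__}")
    return payload


ok = validate_ticket_response({"id": "T-1", "status": "open"})

# Simulate the API renaming its fields: the step fails loudly instead of
# letting the agent continue with data it has misread.
try:
    validate_ticket_response({"ticket_id": "T-1", "state": "open"})
    error = None
except ValueError as exc:
    error = str(exc)
print(error)
```

A loud failure at the step where the format changed is far easier to debug than a wrong action three steps later.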

What I think Brij is really signaling

When Brij says the "shift at the very top" deserves attention, I read it as a warning and an invitation.

It is a warning because agentic systems will move faster than many organizations' governance processes.

It is an invitation because this is where new categories will form: agent operating systems, tool ecosystems, agent testing frameworks, and domain-specific agent products.

If generative AI was about creating content, agentic AI is about creating leverage.

The purple layer is not just smarter text. It is software that can plan, use tools, and execute work.

That is the building block that changes how teams operate.

This blog post expands on a viral LinkedIn post by Brij kishore Pandey, AI Architect | AI Engineer | Generative AI | Agentic AI | Tech, Data & AI Content Creator | 1M+ followers.